Neural4D: The Unified Asset Engine for the Content Economy

In the current digital landscape, the entire 3D industry faces a shared operational bottleneck: Asset Scarcity. The crisis affects everyone from boutique collectible studios to AAA game developers. Demand for immersive content has outpaced the human capacity to create it, and production pipelines for films, games, and physical products routinely stall under the sheer volume of assets required to make digital worlds feel real.

Neural4D addresses this industry-wide deficit not by replacing the artist but by applying the “80/20 Workflow Rule”. By automating the 80% of asset production that is foundational work, including set dressing, props, and prototypes, we free artists to focus on the 20% of “Hero Assets” that define a project’s value.

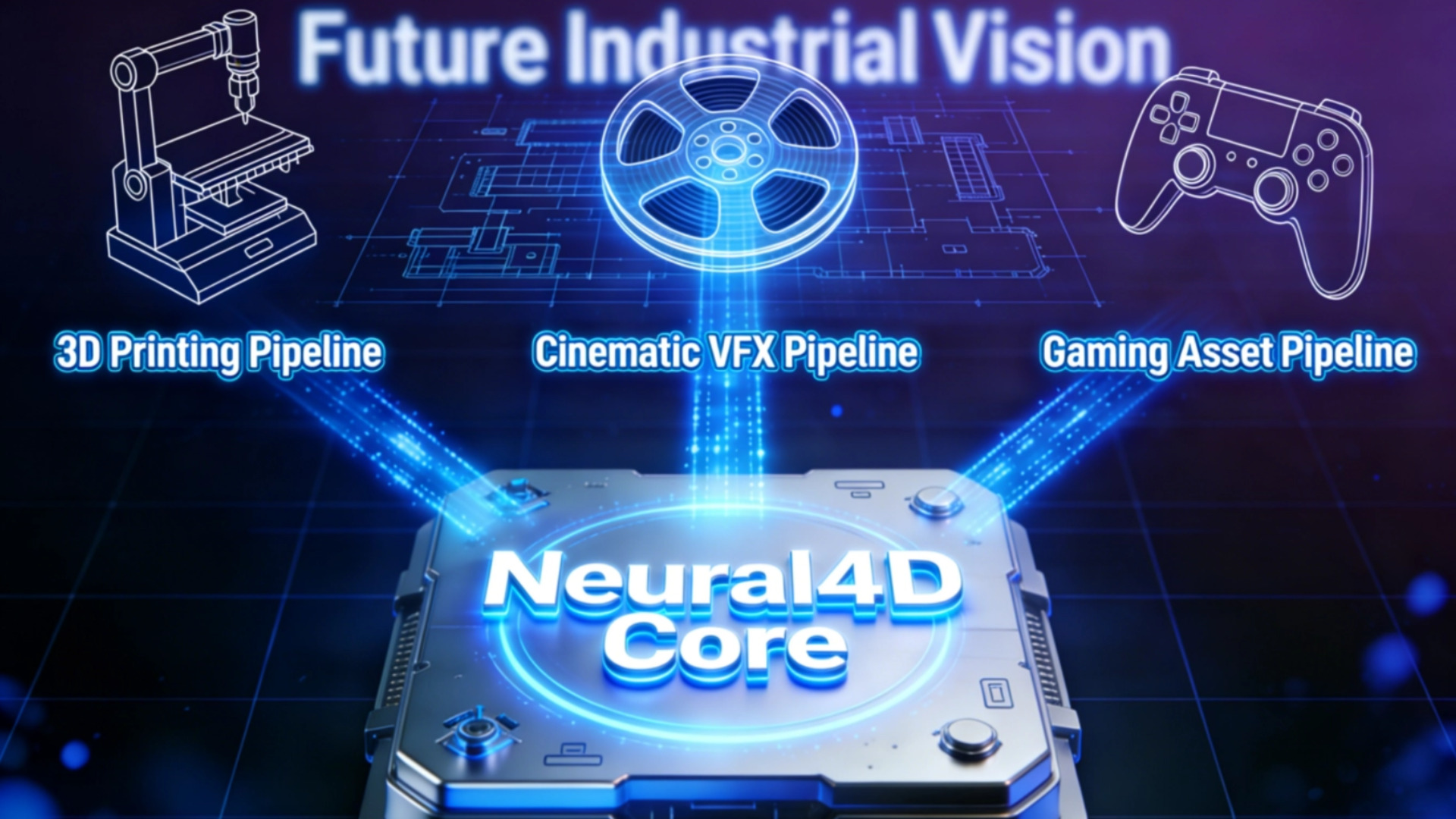

Here is how Neural4D serves as unified infrastructure across the three pillars of digital production, and where its roadmap leads for industrial applications.

1. Manufacturing & Collectibles: Bridging Screen and Reality

Across the collectible and manufacturing sectors, the challenge has shifted from designing objects to materializing them. The industry struggles with legacy AI models that generate “visual illusions”: meshes that look coherent from a fixed angle but reveal non-manifold edges, holes, and inverted normals when exported for physical production.

The Solution: Structural Integrity via Direct3D-S2

For digital collectibles and high-end static displays, geometric validity is binary: a model is either printable or it is not. Neural4D uses the Direct3D-S2 architecture to construct geometry in a continuous volumetric space. Because the surface is derived from the boundary of a solid volume, the output is a closed, manifold volume by default.
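“Printable or not” can be made concrete with the kind of topological check that mesh repair tools run. The sketch below is not Neural4D code; it is a minimal, assumed illustration that takes a list of triangles (vertex-index triples) and counts boundary edges (holes), non-manifold edges (shared by more than two faces), and orientation conflicts (neighboring faces that walk a shared edge in the same direction, the signature of an inverted normal). A closed, manifold, consistently oriented mesh reports zero for all three. It does not detect self-intersections or a mesh that is uniformly inside-out.

```python
from collections import Counter

# Faces are (a, b, c) index triples into a vertex list, wound
# counter-clockwise when viewed from outside the solid.
Face = tuple[int, int, int]


def mesh_report(faces: list[Face]) -> dict[str, int]:
    """Count the topological defects that make a mesh unprintable."""
    undirected: Counter = Counter()  # edge -> number of faces using it
    directed: Counter = Counter()    # (u, v) -> number of faces walking u -> v
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            undirected[frozenset((u, v))] += 1
            directed[(u, v)] += 1
    return {
        # Edge used by only one face: the surface has a hole.
        "boundary_edges": sum(1 for n in undirected.values() if n == 1),
        # Edge shared by three or more faces: not a valid 2-manifold.
        "non_manifold_edges": sum(1 for n in undirected.values() if n > 2),
        # Two faces walk the same edge in the same direction: one is flipped.
        "orientation_conflicts": sum(1 for n in directed.values() if n > 1),
    }


def is_watertight(faces: list[Face]) -> bool:
    return all(count == 0 for count in mesh_report(faces).values())


# A tetrahedron with outward-facing triangles.
TETRA: list[Face] = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]

if __name__ == "__main__":
    print(is_watertight(TETRA))                       # True
    print(mesh_report(TETRA[:-1])["boundary_edges"])  # 3: one face removed
```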

✅ Voxel Resolution: The system operates at a 2048³ voxel resolution. This density preserves the micro-surface details of a sculpt, such as fabric texture or skin pores, so they remain visible after physical casting or injection molding.

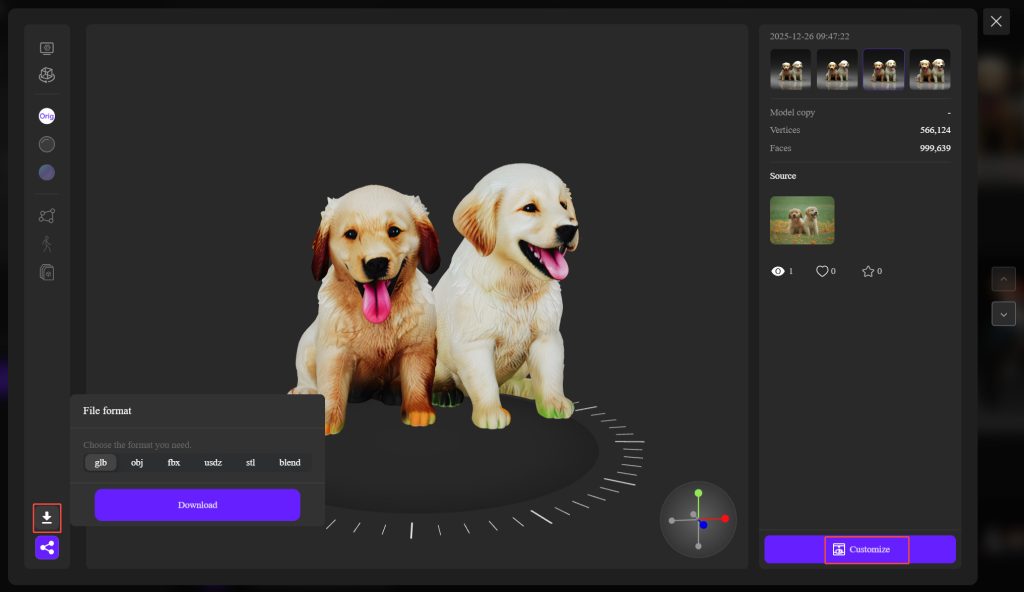

✅ Native STL Export: Unlike surface-based reconstruction methods, Neural4D generates assets that are ready for STL export, which eliminates the mesh repair phase in software such as Magics or Netfabb. Engineers can send generated models directly to the slicer for rapid prototyping.
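What “ready for STL export” demands is easier to see from the format itself. STL, in both its ASCII and binary forms, stores every triangle independently as a facet normal plus three vertex coordinates, with no shared-vertex connectivity. A slicer therefore rebuilds topology by matching coordinates, so any hole or non-manifold edge in the source mesh carries straight through into the file. The following minimal ASCII writer is a hypothetical illustration, not Neural4D's exporter; the function names are assumptions.

```python
import math

Vec3 = tuple[float, float, float]


def facet_normal(p0: Vec3, p1: Vec3, p2: Vec3) -> Vec3:
    """Unit normal from the right-hand rule over the winding p0 -> p1 -> p2."""
    ux, uy, uz = (p1[i] - p0[i] for i in range(3))
    vx, vy, vz = (p2[i] - p0[i] for i in range(3))
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:  # degenerate (zero-area) triangle
        return (0.0, 0.0, 0.0)
    return (nx / length, ny / length, nz / length)


def to_ascii_stl(name: str, vertices: list[Vec3],
                 faces: list[tuple[int, int, int]]) -> str:
    """Serialize an indexed triangle mesh as ASCII STL text."""
    lines = [f"solid {name}"]
    for a, b, c in faces:
        p0, p1, p2 = vertices[a], vertices[b], vertices[c]
        n = facet_normal(p0, p1, p2)
        lines.append(f"  facet normal {n[0]:e} {n[1]:e} {n[2]:e}")
        lines.append("    outer loop")
        for p in (p0, p1, p2):  # coordinates repeat per facet; nothing is shared
            lines.append(f"      vertex {p[0]:e} {p[1]:e} {p[2]:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


# Example: a tetrahedron with outward (counter-clockwise) winding.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
stl_text = to_ascii_stl("tetra", verts, faces)
```

Because the file itself cannot express connectivity, the only place to guarantee a watertight result is upstream, in the geometry that is written out.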

2. Animation & VFX: Accelerating the Visual Narrative

In the VFX and animation sectors, production is moving toward Virtual Production and real-time pre-visualization, and the industry standard has shifted from “fix it in post” to “fix it on set.” However, layout artists still spend a disproportionate amount of time modeling background debris, rocks, and vegetation. This “invisible” work consumes a significant share of the art budget.

The Solution: Infinite Set Dressing & PBR Consistency

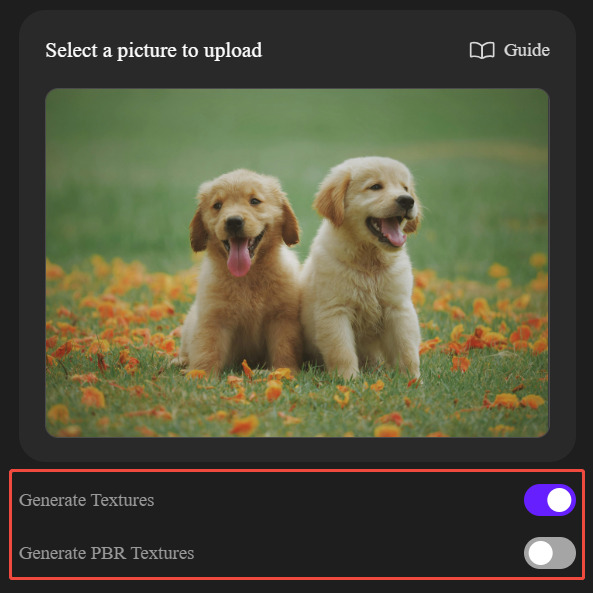

Neural4D acts as an infinite prop department, letting artists batch-generate diverse environmental assets and populate a scene in minutes. The critical differentiator here is Material Decoupling.

✅ Albedo Integrity: Many generative models bake lighting information, such as shadows and highlights, into the texture map. Neural4D instead generates neutral Albedo maps by separating color from illumination.

✅ Full Material Stack: Alongside the Albedo map, the API outputs Normal, Roughness, and Metallic maps. When an asset is dropped into a ray-traced pipeline such as Maya/Arnold or Blender/Cycles, it therefore responds physically correctly to the director’s lighting choices: a generated rock reflects light differently from generated moss, which keeps generated assets visually consistent with handcrafted ones.
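Why baked lighting breaks relighting can be shown with the simplest shading model. Under Lambertian diffuse shading, the reflected value is albedo × max(0, N·L) × light intensity. If the texture already contains a shadow, the renderer applies shading a second time on top of it. The single-channel sketch below uses made-up values (an albedo of 0.6 and a surface that was half-lit when the texture was generated) purely for illustration; production renderers use full BRDFs such as a metallic-roughness model, not plain Lambert.

```python
def lambert(albedo: float, n_dot_l: float, light: float = 1.0) -> float:
    """Diffuse value for one color channel, normalized so a fully lit
    white surface under unit light returns 1.0."""
    return albedo * max(0.0, n_dot_l) * light


TRUE_ALBEDO = 0.6  # hypothetical surface color, one channel

# A generator that bakes lighting stores the *shaded* value as "albedo".
# Here the surface was half-lit (N.L = 0.5) in the scene it was generated in.
baked_texture = lambert(TRUE_ALBEDO, n_dot_l=0.5)  # 0.3
neutral_texture = TRUE_ALBEDO                      # delit: color only

# The director turns the surface to face the key light (N.L = 1.0).
relit_neutral = lambert(neutral_texture, n_dot_l=1.0)  # 0.6: matches a hand-made asset
relit_baked = lambert(baked_texture, n_dot_l=1.0)      # 0.3: the old shadow never leaves

# Turning the light away (N.L = 0.5) darkens the baked asset twice.
print(lambert(neutral_texture, 0.5), lambert(baked_texture, 0.5))  # 0.3 0.15
```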

3. Gaming & Interaction: Optimizing for Runtime Performance

The gaming sector faces the most brutal trade-off between Scale and Performance. Modern open-world games require thousands of unique objects to avoid visible repetition, yet every unique mesh and material adds draw calls and memory cost that eat into the frame rate. Developers are constantly battling bloated, unoptimized AI meshes that degrade performance.

The Solution: Topology-Aware Asset Population

Neural4D provides a scalable solution for Asset Population, focused on the vertex-to-fidelity ratio: how much visible detail each vertex buys.

✅ Clean Topology: The output meshes prioritize quad-dominant structures where possible. This reduces shading artifacts and simplifies the UV unwrapping process.

✅ Engine Readiness: Assets export in GLB and FBX formats with normalized scale and centered pivots. This allows technical artists to populate open-world grids programmatically via the API. It significantly reduces the “Time-to-Import” for thousands of environmental props in engines like Unreal Engine 5 or Unity.
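Normalized scale and centered pivots matter because they make placement a pure function of the grid: each asset's pivot can sit at a cell center with no per-asset offset correction. The sketch below assumes the assets have already been generated and downloaded; the file names, jitter amount, and scale range are hypothetical, and the output is a plain list of transforms that an engine-side import script (not shown) would instantiate. A fixed random seed keeps the layout reproducible between runs.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    asset: str      # hypothetical file name of a generated GLB/FBX prop
    x: float        # world position of the pivot (meters)
    z: float
    yaw_deg: float  # rotation about the vertical axis
    scale: float    # uniform scale; assets assumed pre-normalized to 1.0


def populate_grid(assets: list[str], rows: int, cols: int, cell: float,
                  jitter: float = 0.25, seed: int = 0) -> list[Placement]:
    """Scatter one prop per grid cell around the cell center."""
    if not 0.0 <= jitter < 0.5:
        raise ValueError("jitter must be in [0, 0.5) to keep pivots inside their cell")
    rng = random.Random(seed)  # local RNG: same seed -> same layout
    out = []
    for r in range(rows):
        for c in range(cols):
            # Centered pivots mean the cell center is the placement point.
            cx, cz = (c + 0.5) * cell, (r + 0.5) * cell
            out.append(Placement(
                asset=rng.choice(assets),
                x=cx + rng.uniform(-jitter, jitter) * cell,
                z=cz + rng.uniform(-jitter, jitter) * cell,
                yaw_deg=rng.uniform(0.0, 360.0),
                scale=rng.uniform(0.9, 1.1),
            ))
    return out


PROPS = ["rock_a.glb", "rock_b.glb", "stump.glb", "fern.glb"]  # hypothetical outputs
layout = populate_grid(PROPS, rows=20, cols=20, cell=4.0)
```

If the generated assets had arbitrary pivots or scales, each placement would need a per-asset bounding-box correction before it could be trusted, which is exactly the import-time work the export conventions remove.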

4. The Transmedia Synergy: One Source, Multiple Outputs

The defining characteristic of the modern media landscape is convergence. An IP is no longer just a movie. It is simultaneously a game, a VR experience, and a line of physical merchandise.

Neural4D facilitates this Transmedia Synergy. From a single generation seed within our pipeline, a studio can derive a unified “Source of Truth” that feeds multiple output streams:

- High-Density Mesh: Exported as OBJ for the cinematic trailer or marketing stills.

- Optimized LOD (Level of Detail): Exported as GLB for mobile game integration or web-based AR viewers.

- Watertight STL: Exported for the production of the collector’s edition figurine.

This unified workflow ensures that the visual identity of the asset remains consistent regardless of where the audience encounters it. It also eliminates the need to remodel the same asset three times.
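The “one source, multiple outputs” idea amounts to a single asset record fanned out into per-target export settings. The sketch below is a hypothetical plan, not the Neural4D API: the file naming, the 50,000-triangle mobile budget, and the halving ratio between LOD levels are assumptions chosen for illustration. Only the cinematic OBJ and the STL keep full density, and only the STL is flagged as requiring a watertight check.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ExportTarget:
    path: str
    fmt: str
    max_triangles: Optional[int]  # None = keep the source density
    watertight: bool              # must pass a closed-manifold check


def lod_budgets(base: int, levels: int, ratio: float = 0.5) -> list[int]:
    """Triangle budgets for LOD0..LOD(levels-1), each a fixed fraction of the previous."""
    return [max(1, int(base * ratio ** i)) for i in range(levels)]


def plan_exports(source_id: str, mobile_base: int = 50_000,
                 lod_levels: int = 3) -> list[ExportTarget]:
    """Derive every deliverable from one source asset id."""
    targets = [ExportTarget(f"{source_id}_hero.obj", "OBJ", None, False)]
    for level, budget in enumerate(lod_budgets(mobile_base, lod_levels)):
        targets.append(ExportTarget(f"{source_id}_lod{level}.glb", "GLB", budget, False))
    targets.append(ExportTarget(f"{source_id}_print.stl", "STL", None, True))
    return targets


plan = plan_exports("dragon_v1")  # hypothetical asset id
```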

5. Future Outlook: From Visuals to Industrial Simulation

Today, Neural4D is solving the critical problems of Visual Representation and Geometric Topology that plague the creative industries. We are streamlining the “Art Pipeline.”

However, looking ahead, the Direct3D architecture is evolving toward a new frontier: High-Fidelity Industrial Simulation. The long-term vision is to move beyond “looking real” to “acting real.”

Future iterations of Neural4D aim to generate assets with accurate physical properties, including mass, density, and behavior under stress. This would allow AI-generated models to be used not just in games but in engineering simulations and industrial testing within CAD environments, marking the inevitable transition of Generative 3D from a tool for Digital Creation to a foundation for Industrial Reality.
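One physical property is already within reach of any watertight mesh: enclosed volume, and therefore mass for an assumed uniform density. By the divergence theorem, the volume of a closed, outward-oriented triangle mesh equals the sum of the signed volumes of the tetrahedra formed by each face and the origin, V = Σ v₀·(v₁×v₂) / 6. The sketch below illustrates that calculation only; it is not a Neural4D feature, the density is an arbitrary input, and it says nothing about stress behavior, which requires a proper simulation.

```python
Vec3 = tuple[float, float, float]


def signed_volume(vertices: list[Vec3], faces: list[tuple[int, int, int]]) -> float:
    """Enclosed volume of a closed mesh with outward (counter-clockwise) winding.
    A negative result means the mesh is inside-out."""
    total = 0.0
    for a, b, c in faces:
        (x0, y0, z0), (x1, y1, z1), (x2, y2, z2) = vertices[a], vertices[b], vertices[c]
        # Scalar triple product v0 . (v1 x v2) = 6 x signed tetra volume with the origin.
        total += (x0 * (y1 * z2 - z1 * y2)
                  - y0 * (x1 * z2 - z1 * x2)
                  + z0 * (x1 * y2 - y1 * x2))
    return total / 6.0


def mass(vertices: list[Vec3], faces: list[tuple[int, int, int]], density: float) -> float:
    """Mass for a uniform density; units follow the inputs (cm^3 x g/cm^3 = g)."""
    return signed_volume(vertices, faces) * density


# Unit cube with outward-facing triangles.
CUBE_V = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0),
          (0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (1.0, 1.0, 1.0), (0.0, 1.0, 1.0)]
CUBE_F = [(0, 2, 1), (0, 3, 2), (4, 5, 6), (4, 6, 7), (0, 1, 5), (0, 5, 4),
          (3, 7, 6), (3, 6, 2), (0, 4, 7), (0, 7, 3), (1, 2, 6), (1, 6, 5)]
```

The formula only holds for a closed surface; on a mesh with holes the result depends on where the origin sits, which is one more reason watertight output matters beyond printing.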